SAE Noise & Vibration Conference and Exhibition

Every two years, the Society of Automotive Engineers (SAE) holds a noise and vibration conference in Grand Rapids, MI, that is of great interest to anyone working with automotive audio. This year’s SAE Noise & Vibration Conference and Exhibition, May 12-15, proved as appealing as ever, with a strong technical program, valuable discussions, and useful perspectives from noise and vibration experts.

AI tends to be perceived as a contextual function, much like a co-pilot: aware of the problem, it helps determine better design changes or build simulations and suggests efficient ways to attempt a solution. Nevertheless, both AI and ML are data-driven technologies. The automotive and NVH industry has decades of data available and is beginning to apply it to old and new problems, optimizing audio system layouts and structural improvements for both comfort and sound quality. Setting goals for these emerging technologies requires a good grounding in the methods that have been used so far.

The conference program opened with a Vehicle Development and Active Sound Design Workshop sponsored by HEAD acoustics. It featured Stefan Hank and Wade Bray from HEAD acoustics, and Thomas Wellmann, FEV NA Inc., exploring the approach to active sound design for battery-electric vehicles (BEVs) and hybrid-electric vehicles (HEVs).

Thomas Wellmann gave a comprehensive overview of how noise sources are located and decomposed for analysis to determine which sources are primary, secondary, and so on; how they are then separated and controlled in design; and how transfer path analysis is applied in ICE, BEV, and HEV vehicle design. He also discussed how to reach the targeted external sound levels so that a vehicle meets the acoustic vehicle alerting system (AVAS) requirements of the FMVSS 141 standard.
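Transfer path analysis is easier to picture with numbers. The sketch below assumes the classical formulation, in which the pressure at a receiver is the complex sum of each path’s frequency response function (FRF) multiplied by the operational force at that path’s input, and it ranks the paths by contribution at a single frequency line. The FRF and force values are hypothetical and are not taken from Wellmann’s presentation.

```python
import numpy as np

def path_contributions(frfs, forces):
    """Complex contribution of each transfer path at one frequency line.

    frfs   -- complex FRFs H_i (Pa/N) from each path input to the receiver
    forces -- complex operational forces F_i (N) at each path input
    """
    frfs = np.asarray(frfs, dtype=complex)
    forces = np.asarray(forces, dtype=complex)
    return frfs * forces  # p_i = H_i * F_i

# Hypothetical values for three mount paths at one frequency line.
H = [0.02 + 0.01j, 0.005 - 0.002j, 0.01 + 0.0j]  # Pa/N
F = [10.0 + 0.0j, 40.0 + 5.0j, 5.0 - 5.0j]       # N

contrib = path_contributions(H, F)
total = contrib.sum()                    # receiver pressure: phase-aware sum
ranking = np.argsort(-np.abs(contrib))   # strongest path first
print("path ranking:", ranking.tolist(), "total pressure:", total)
```

Because the sum is phase-aware, two strong paths can partially cancel at the receiver, so ranking by magnitude shows where energy enters rather than exactly what the listener hears.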

With this understanding of how noise sources can be controlled, Stefan Hank, a principal sound designer for HEAD acoustics, explained how active sound design improves perceived sound quality, gives the car a character that creates an emotional connection, and conveys quality. Sound design can also extend human-machine interface (HMI) features by enhancing comfort, building trust, supporting safety, and conveying information, all of which contribute to a positive user experience. The presentation detailed how sound design can take place in a virtual workspace, using vehicle measurements to account for wind, road, and powertrain noise. Creating a car’s total, dynamically changing sound through sound-layering model creation, interactive optimization in virtual tuning, and then vehicle integration is certainly a creative and captivating process.
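The idea of a sound-layering model can be sketched as a sum of tones locked to motor orders, each with a gain that depends on the driving state. This is a minimal illustration under assumed values (the sample rate, orders, speed breakpoints, and gains are all hypothetical), not HEAD acoustics’ tooling; in a real design the gains would come out of interactive virtual tuning, and the tones would follow a changing motor speed rather than a fixed one.

```python
import numpy as np

FS = 48_000  # sample rate in Hz (assumed)

# Hypothetical layers: (motor order, speed breakpoints in km/h, gain at each breakpoint)
LAYERS = [
    (2.0, [0, 50, 120], [1.0, 0.6, 0.2]),
    (4.0, [0, 50, 120], [0.2, 0.8, 0.5]),
    (6.0, [0, 50, 120], [0.0, 0.3, 0.7]),
]

def render_layers(motor_rpm, speed_kmh, duration_s=0.5, layers=LAYERS):
    """Render a short block of layered order tones for one operating point."""
    t = np.arange(int(duration_s * FS)) / FS
    out = np.zeros_like(t)
    for order, speeds, gains in layers:
        freq = order * motor_rpm / 60.0             # order frequency in Hz
        gain = np.interp(speed_kmh, speeds, gains)  # speed-dependent layer gain
        out += gain * np.sin(2 * np.pi * freq * t)
    return out / len(layers)                        # simple headroom scaling

block = render_layers(motor_rpm=3000, speed_kmh=0)  # 2nd and 4th orders only
```

Keeping each layer’s gain as a separate, interpolated curve is what makes the tuning interactive: a designer can reshape one layer without touching the others.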

This leads to the question of whether, in the end, the sound was better. An engineer from a major electric vehicle manufacturer asked the audience whether they would leave the sound turned on all the time. About 10% said they would. When asked whether they would keep it on for spirited driving, the number was close to 30%. Most said they would turn it off when listening to audio sources.

Wade Bray rose to say, “The artificial sound can sound small compared to the broader audio sound systems – perceived artifacts will be rejected as fake, presentation with care to not do harm is important.” Drawing on some of his work from the mid-1980s, he explained that “there is a way to control the near field sound so that the effects that are created are perceived non-homogeneously at all the speakers (i.e., randomizing the presentation to each), so the brain is not disturbed by and focused on the effect that is being presented.” The same method has been used to increase the perceived diffusion of the reverberant field in electro-acoustic reverberation enhancement systems for rooms.
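One generic way to make the same effect arrive differently at each loudspeaker is to pass it through a different random-phase, flat-magnitude FIR filter per channel, which lowers inter-channel correlation without changing the spectrum. This is an assumed, textbook decorrelation technique shown for illustration, not a reconstruction of Bray’s mid-1980s method; the filter length and seeds are arbitrary.

```python
import numpy as np

def random_phase_fir(n_taps, rng):
    """Flat-magnitude FIR filter with random phase (a common decorrelator)."""
    n_bins = n_taps // 2 + 1
    phase = rng.uniform(-np.pi, np.pi, n_bins)
    phase[0] = 0.0              # DC bin must be real
    if n_taps % 2 == 0:
        phase[-1] = 0.0         # Nyquist bin must be real for even lengths
    return np.fft.irfft(np.exp(1j * phase), n=n_taps)

def decorrelate(signal, n_speakers, n_taps=1024, seed=0):
    """Return one differently filtered copy of `signal` per loudspeaker."""
    rng = np.random.default_rng(seed)
    return [np.convolve(signal, random_phase_fir(n_taps, rng)) for _ in range(n_speakers)]

noise = np.random.default_rng(1).standard_normal(48_000)
feeds = decorrelate(noise, n_speakers=2)
r = np.corrcoef(feeds[0], feeds[1])[0, 1]  # close to 0 instead of 1
```

Long random-phase filters like this one can smear transients audibly, which is one reason practical decorrelators are tuned with care.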

On the day of his 80th birthday, Wade Bray later offered what could be considered a benevolent sermon about perceived sound quality; he even invoked the Pontiff in Rome and the Sistine Chapel. It covered “Practical Work Tips, New Standards, the Human-Centered Soundscape,” the theme of the conference’s Sound Quality workshop. The workshop focused on productive, practical techniques for extracting information, and on new measurement standards and metrics that make different psychoacoustic “sound complications” directly comparable in perceptual strength (found in the standards ECMA-418-2 and DIN 38455).

Wade began with the human-centered soundscape: “context and other senses” (based on the ISO 12913-1, -2, and -3 standards). Sound perception always includes metadata (data about the data). Data plus metadata equals meaning. Metadata provides context; data are audible situations or recordings and the appropriate technical measurements. The brain integrates and assembles data and metadata to form meaning and sound perception, and all of these combine in sound quality. Soundscape is the acoustic environment as perceived, experienced, and understood. It is not so much subjective as perceptual, because it requires appropriate measurements that capture our perception.
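As one example of how the ISO 12913 series turns perceptual ratings into comparable numbers, ISO/TS 12913-3 projects eight attribute ratings onto pleasantness and eventfulness axes. The sketch below follows that projection as we understand it (1-5 ratings, 45-degree weights, normalization to the range -1 to 1); check it against the standard before relying on it. The rating values in the example are hypothetical.

```python
import math

COS45 = math.cos(math.radians(45))
NORM = 4 + math.sqrt(32)  # scales both coordinates to the range [-1, 1]

def iso12913_coordinates(pleasant, chaotic, vibrant, uneventful,
                         calm, annoying, monotonous, eventful):
    """Pleasantness and eventfulness from eight attribute ratings (1-5 scale)."""
    p = ((pleasant - annoying)
         + COS45 * (calm - chaotic)
         + COS45 * (vibrant - monotonous)) / NORM
    e = ((eventful - uneventful)
         + COS45 * (chaotic - calm)
         + COS45 * (vibrant - monotonous)) / NORM
    return p, e

# Hypothetical listener ratings for one recorded cabin situation.
p, ev = iso12913_coordinates(pleasant=5, chaotic=1, vibrant=5, uneventful=3,
                             calm=5, annoying=1, monotonous=1, eventful=3)
```

In this example, the ratings place the situation at the “fully pleasant, neutral eventfulness” point of the circumplex.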

He described the factors that influence a hearing event: masking, sound impression, spatial distribution, and motion, as well as selectivity (at-will or automatic attention command) and complex phase relationships. This led him to argue against single-microphone measurement. “I cannot measure the ceiling of the Sistine Chapel with a single laser beam measurement of distance. I cannot see the shape of the chapel ceiling with a single point measurement.” At the very least, binaural measurement technology is imperative for capturing perception.
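A small illustration of what a single microphone cannot capture: with two ear signals, the interaural time difference (ITD) can be estimated from the lag that maximizes their cross-correlation. This is a simplified, broadband sketch with a synthetic noise source and an assumed 20-sample delay; practical binaural analysis works in frequency bands and also uses interaural level differences.

```python
import numpy as np

def estimate_itd(left, right, fs, max_itd_s=1e-3):
    """Interaural time difference in seconds via cross-correlation.
    A positive value means the sound reached the left ear first."""
    max_lag = int(round(max_itd_s * fs))
    core = slice(max_lag, len(left) - max_lag)  # skip samples wrapped by np.roll
    lags = np.arange(-max_lag, max_lag + 1)
    corr = [np.dot(left[core], np.roll(right, -lag)[core]) for lag in lags]
    return lags[int(np.argmax(corr))] / fs

fs = 48_000
left = np.random.default_rng(7).standard_normal(fs // 10)  # 100 ms of noise
delay = 20                                                 # samples, about 0.42 ms
right = np.concatenate([np.zeros(delay), left[:-delay]])   # right ear hears it later
itd = estimate_itd(left, right, fs)
```

A single omnidirectional microphone records only one of these signals, so the lag, and the spatial cue it carries, is simply not in the data.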

Wade’s approach is to measure what we need so that we can see the data the way we hear it, trust our own perception, and think intuitively. The first-order metadata is our brain process, the second is the soundscape and how it is measured, and the third is the human’s CPU, the brain.

This requires making multiple types of measurements simultaneously, namely modulation (fluctuation), roughness, spectrum (tonality), and loudness, and designing with all of them taken into account, in accordance with the ECMA-418-2 (2022-2025) standard. Just make sure the time step of the measurement is shorter than the event being measured, because this is how we will perceive the data, he added. This way, psychoacoustic metrics can be relied upon and trusted.
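The time-step advice can be checked with a simple RMS level rather than an ECMA-418-2 metric. In the sketch below (synthetic signal, assumed block lengths), a 50 ms event in a quiet 1 kHz tone is measured with 10 ms blocks and with 1 s blocks. The short blocks report the event’s level, while the long block dilutes it by roughly 13 dB.

```python
import numpy as np

def block_rms_db(x, fs, window_s):
    """RMS level (dB re 1.0) in consecutive non-overlapping blocks of window_s."""
    n = int(round(window_s * fs))
    n_blocks = len(x) // n
    blocks = x[: n_blocks * n].reshape(n_blocks, n)
    return 20 * np.log10(np.sqrt(np.mean(blocks ** 2, axis=1)) + 1e-12)

fs = 48_000
t = np.arange(2 * fs) / fs
x = 0.01 * np.sin(2 * np.pi * 1000 * t)  # quiet steady tone, 2 s long
x[48_000:50_400] *= 101                  # 50 ms event at 1.0 s (amplitude 1.01)

fine = block_rms_db(x, fs, 0.010)    # 10 ms steps: shorter than the event
coarse = block_rms_db(x, fs, 1.0)    # 1 s steps: longer than the event
print(f"peak level, 10 ms steps: {fine.max():.1f} dB")
print(f"peak level, 1 s steps:   {coarse.max():.1f} dB")
```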

Our brain must reconcile the conflicting information it receives. Listening to music without seat vibration can change the technical evaluation, and auditory data can be overridden by visual context (the McGurk effect). Visual information about being in a car space therefore needs to be included, even when listening to binaural playback of measured or simulated data. The ISO 12913 Classification of a Sound Event makes perception objective. If we keep in mind the effects of the data about the data, we will make the detailed measurements we need to see the data as we hear it. The same is true of the ML data used to train a simulation model.

The large data sets collected to build ML models for structural vibration optimization and acoustic enhancement can be viewed in light of Wade Bray’s overview of perceptual sound quality. Any AI and ML solutions we apply to evolving vehicle NVH challenges need to address how to add sound design without disturbing the brain and prompting listener complaints.

More Thoughts About AI/ML

The multifaceted, fast-paced evolution of the automotive industry extends to the NVH behavior of products, driven by regulatory requirements and ever-increasing customer expectations for comfort. Automotive engineers urgently need to explore new, advanced technologies to achieve a “First Time Right” product development approach for NVH design and to deliver high-quality products in shorter timeframes. AI and ML are transformative technologies reshaping numerous industries: AI enables machines to replicate human cognitive functions such as reasoning and decision-making, while ML employs algorithms that learn and improve from data over time.

For this, we need models trained on large amounts of data. At this SAE conference, AI and ML permeated many discussions, including the round table on “PrototypeLess Vehicle Development Programs and NVH Engineering: Rainbow Chase or the New Horizon,” with Mark L. Clapper (Ford Motor Co.), Eric Frank (Hottinger Bruel & Kjaer), and Michael Winship (AVL Michigan Holdings). There was a sense of frustration among the panelists and in the audience’s questions; more understanding of the tools at hand is still needed.

Gaurav Agnihotri (Stellantis) had a similar experience during his “Chat With The Experts” session about AI and ML in NVH. As with any audio test and simulation, there are many key discussion points for AI/ML in NVH testing and simulation. Because there is very little previous work, the discussion tends to be about what could be done, and at times some attendees largely ignored the reality and context of the present day. Many asked how AI could solve their problems and the challenges they face in their daily workflows. Some questions seemed absurd and some were valid. We still treat AI as a magic black box that we want to solve all our problems. Gaurav explained more than once that “the understanding must be that AI/ML won’t create data that doesn’t exist.”

We are at a discovery stage. The gist of Gaurav’s key discussion points on testing was: How can AI/ML elevate root cause analysis from complex spectral data? (For example, automated anomaly detection in acoustic signatures, intelligent classification of NVH events.) Can transfer learning and advanced signal processing (deep learning for source separation) truly help us diagnose novel NVH issues faster and more reliably across diverse vehicle platforms? (The challenge of “Unknown Unknowns.”) And where is the immediate low-hanging fruit for AI/ML to significantly reduce testing time and improve diagnostic accuracy in our current workflows?
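As one example of automated anomaly detection in acoustic signatures, a simple baseline learns band-level statistics from known-good recordings and flags any recording that deviates strongly in some band. This is a hypothetical, minimal sketch (band count, threshold, and synthetic signals are all assumptions), not a method presented at the session. It can only judge a recording against the baseline data it was given, which echoes Gaurav’s caution.

```python
import numpy as np

def band_levels_db(x, n_bands=32):
    """Energy (dB) in equal-width FFT bands: a crude acoustic signature."""
    power = np.abs(np.fft.rfft(x)) ** 2
    bands = np.array_split(power, n_bands)
    return 10 * np.log10(np.array([b.sum() for b in bands]) + 1e-20)

class SignatureAnomalyDetector:
    """Flags recordings whose band levels deviate from a known-good baseline."""

    def __init__(self, threshold=5.0):
        self.threshold = threshold  # largest allowed |z-score| in any band

    def fit(self, good_recordings):
        feats = np.array([band_levels_db(x) for x in good_recordings])
        self.mean = feats.mean(axis=0)
        self.std = feats.std(axis=0) + 1e-6
        return self

    def score(self, x):
        return float(np.max(np.abs((band_levels_db(x) - self.mean) / self.std)))

    def is_anomalous(self, x):
        return self.score(x) > self.threshold

rng = np.random.default_rng(1)
detector = SignatureAnomalyDetector().fit([rng.standard_normal(4096) for _ in range(50)])
normal = rng.standard_normal(4096)
n = np.arange(4096)
faulty = normal + 2.0 * np.sin(2 * np.pi * 400 * n / 4096)  # added tonal "whine"
```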

And, in bridging the reality gap between test and simulation: How can AI/ML, such as physics-informed neural networks (PINNs) or digital twins, address the inherent deficits in simulation models when faced with real-world manufacturing variability and complex interactions? Is there a chance of creating a unified test and simulation ecosystem? What are the most promising AI/ML techniques for improving test-simulation correlation and creating hybrid models that leverage the best of both physical and data-driven approaches?

Even though those and many other key discussion points were largely ignored by attendees, Gaurav demonstrated how to use Microsoft’s Copilot to process text training data, web data, images converted to text, and structured data to predict a logical output. He used a Large Language Model (LLM) Agent Orchestrator communicating with an LMS COM API for LMS Test Lab.

Some conference participants working in AI and ML also addressed the forward-looking points Gaurav raised. In the area of electric vehicles, there was discussion about sound branding and proactive issue detection, and how generative AI and sensor fusion can support NVH design. The potential of deploying AI/ML for real-time, in-vehicle NVH monitoring and diagnostics was also discussed.

Training AI for Simulation

Bhaskar Banerjee from ANSYS presented “Cabin Noise Modeling for Seat Location Variation Using Simulation and AI.” The challenges and variables essentially defined the goal: to create a simulation model that can predict the change in sound from each loudspeaker for each listener as the seat location changes, across different cabin sizes and for small variations in seat position within a given cabin. Where should each speaker be located to optimize the received sound?

The concept for solving the problem and the process for validating the AI-driven simulation model (SimAI) comprised 10 steps overall. According to Banerjee, the finite element method (FEM) stage begins by creating the cabin model with assumed speaker locations, levels, cabin shape, and seat configuration. The model is then solved to confirm that the sound levels at the driver’s and passenger’s ears are reasonable. To create the training data, the initial ranges for the input and output parameters are defined, and multiple Design of Experiments (DOE) points are solved within that range, and for each frequency interval, to generate training data for SimAI.
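The presentation did not say how the DOE points were sampled. Latin hypercube sampling is one common choice for filling a parameter range evenly, and the sketch below uses it for 1600 points. The three parameters and their ranges are hypothetical.

```python
import numpy as np

def latin_hypercube(n_samples, bounds, seed=0):
    """Latin hypercube sample: each parameter range is split into n_samples
    equal strata, and every stratum is used exactly once per parameter."""
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)   # shape (n_params, 2): [low, high]
    n_params = len(bounds)
    # one random point inside each stratum, for every parameter
    u = (np.arange(n_samples)[:, None] + rng.random((n_samples, n_params))) / n_samples
    # shuffle the strata independently for each parameter
    for j in range(n_params):
        u[:, j] = rng.permutation(u[:, j])
    low, high = bounds[:, 0], bounds[:, 1]
    return low + u * (high - low)

# Hypothetical parameters: seat fore-aft offset (m), seat height offset (m), speaker gain (dB)
BOUNDS = [[-0.10, 0.10], [-0.03, 0.03], [-6.0, 0.0]]
doe = latin_hypercube(1600, BOUNDS)  # each row is one configuration to solve
```

Each row would then be solved once per frequency interval to build the training set.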

To validate SimAI, Banerjee proposed randomly generating new test examples within the training data range, using SimAI to run predictions, and then comparing the SimAI predictions with actual solved results for the same configurations. For a new cabin design, a modified model is created with seat movement but the same speaker locations, and a new DOE is solved with the modified model to create more training data with different seat locations. For overall validation, test cases with new seat locations are randomly generated and predicted with SimAI, and the SimAI predictions are finally compared with a detailed solution for the modified cabin. The results of these steps were presented.
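The validation loop (random test cases inside the training range, prediction, comparison against solved results) can be sketched with toy stand-ins. Both functions below are hypothetical: an analytic formula plays the role of the FEM solve, and linear interpolation between 16 training points plays the role of the surrogate. SimAI’s internals were not described.

```python
import numpy as np

def solved_spl_db(seat_offset_m):
    """Hypothetical stand-in for a full FEM solve at one frequency."""
    return 85.0 + 3.0 * np.sin(2 * np.pi * seat_offset_m / 0.6)

# "Training data": solved results at 16 points across the seat-travel range.
x_train = np.linspace(0.0, 0.3, 16)
y_train = solved_spl_db(x_train)

def surrogate_spl_db(seat_offset_m):
    """Hypothetical surrogate: linear interpolation between training points."""
    return np.interp(seat_offset_m, x_train, y_train)

# Validation: random new cases drawn from within the training range.
rng = np.random.default_rng(42)
x_test = rng.uniform(0.0, 0.3, 200)
errors_db = surrogate_spl_db(x_test) - solved_spl_db(x_test)
max_abs_error_db = float(np.max(np.abs(errors_db)))
```

Drawing test cases only from inside the training range matters: a surrogate can look excellent there and still fail for configurations outside it.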

Generating the training data from the base model for 1600 data points currently takes 6-10 days to solve (!). Once the AI model is trained, the base model is no longer needed. The resulting model runs in 10 minutes, and the AI result is available in 5 minutes. The model for the change in seat location is then run for 1600 data points again. Further work allows changes in the number of seats and in cabin interiors, because the model keeps learning with each run. The same process also applies to general room acoustics.
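The reported figures imply the following rough arithmetic. The sketch assumes that the 5-minute AI result stands in for the full 1600-point solve and ignores the one-time training cost; the source does not spell out either point.

```python
def solve_stats(days, n_points=1600, ai_minutes=5.0):
    """Average FEM minutes per DOE point, and speed-up versus the AI result."""
    solve_minutes = days * 24 * 60
    return solve_minutes / n_points, solve_minutes / ai_minutes

for days in (6, 10):
    per_point, speedup = solve_stats(days)
    print(f"{days} days: {per_point:.1f} min per point, ~{speedup:.0f}x")
# 6 days: 5.4 min per point, ~1728x
# 10 days: 9.0 min per point, ~2880x
```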

Using the same training data set for changing seats and interiors is valid as long as the change isn’t too large (a 300 mm increase in cabin size will cause errors). FEM was used because it captures the variation in 3D space more accurately than the boundary element method (BEM) or ray tracing. FEM is more expensive in solving time and model size, but its solution is also more valuable (up to 2000 Hz).
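Part of FEM’s cost up to 2000 Hz follows from mesh size. A common rule of thumb is about six linear elements per acoustic wavelength; the sketch below applies it, with an assumed speed of sound and a hypothetical 3 m³ cabin air volume. The actual mesh used in the study was not described.

```python
SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C (assumed)

def max_element_size(f_max_hz, elements_per_wavelength=6):
    """Largest element edge that still resolves f_max (rule of thumb)."""
    wavelength = SPEED_OF_SOUND / f_max_hz
    return wavelength / elements_per_wavelength

h = max_element_size(2000.0)              # about 0.0286 m, roughly 29 mm
cabin_volume_m3 = 3.0                     # hypothetical cabin air volume
approx_elements = cabin_volume_m3 / h**3  # rough count of cube-shaped elements
```

Because the element count scales with the cube of the upper frequency, doubling the frequency limit multiplies it by roughly eight.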

In the area of simulation to reduce buzz, squeak, and rattle (BSR), Jagadeesh Thota (Hyundai Motor India Engineering Pvt., Ltd.), Seungchan Choi, and Jong-Suh Park (Hyundai Motor Co.) presented “A Study on the Subwoofer Rattle Noise Analysis and System Target Setting.” Using a case study, they determined critical stiffness levels for subwoofer mounting that avoid unexpected NVH issues.
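One simple way to frame a mounting stiffness target is a single-degree-of-freedom check that places the mount resonance above the band the subwoofer excites. This is a generic sketch, not the method of the Hyundai paper; the 4 kg mass and the 120 Hz target are hypothetical.

```python
import math

def natural_frequency_hz(stiffness_n_per_m, mass_kg):
    """Single-degree-of-freedom natural frequency: f_n = sqrt(k/m) / (2*pi)."""
    return math.sqrt(stiffness_n_per_m / mass_kg) / (2 * math.pi)

def min_stiffness(mass_kg, f_target_hz):
    """Stiffness that puts f_n at f_target: k = m * (2*pi*f)**2."""
    return mass_kg * (2 * math.pi * f_target_hz) ** 2

# Hypothetical subwoofer assembly: 4 kg, resonance kept at or above 120 Hz
k_min = min_stiffness(4.0, 120.0)  # about 2.27e6 N/m
```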

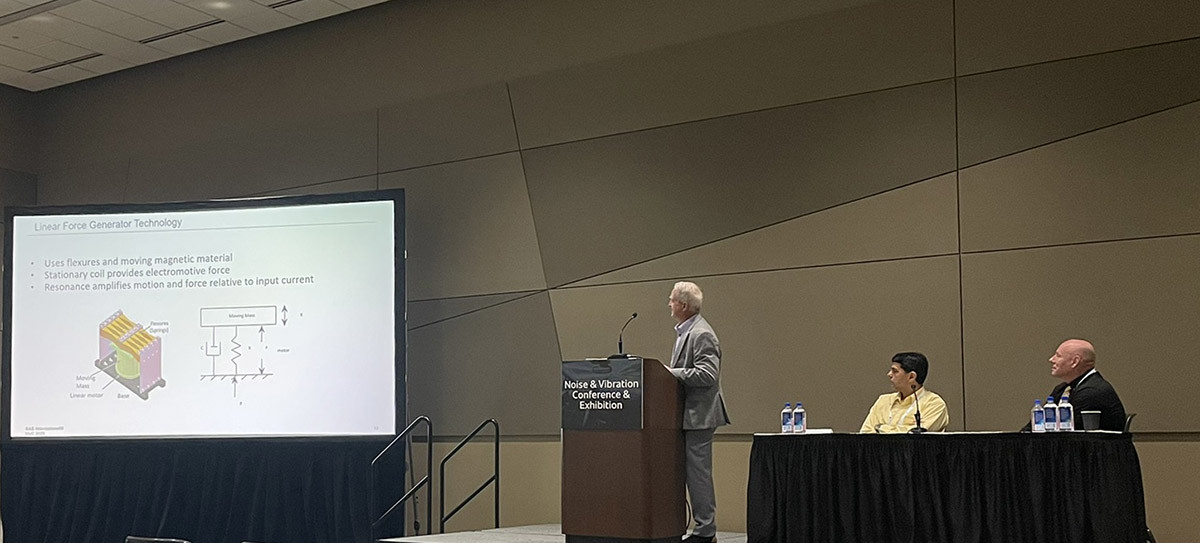

For global vehicle vibration “enhancement,” Mark A. Norris (Parker Lord), Jeffrey Orzechowski (Stellantis), Brad Sanderson and Andrew Vantimmeren (GHSP), and Douglas Swanson (Parker Lord) began by discussing how to reduce second-order vibration in the RAM Truck with a Hemi engine when cylinders are deactivated for fuel savings. Rather than active engine mounts, pendulum measures, or linear actuators with complex mounts, they used a Circular Force Generator (CFG).
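Why cylinder deactivation produces second-order vibration follows from four-stroke arithmetic: each cylinder fires once every two crankshaft revolutions, so a V8 fires at the 4th engine order, and with four cylinders deactivated the firing drops to the 2nd order. The 1500 rpm operating point below is illustrative.

```python
def firing_order(active_cylinders, strokes=4):
    """Firing events per crankshaft revolution (four-stroke: cylinders / 2)."""
    return active_cylinders / (strokes / 2)

def order_frequency_hz(rpm, order):
    """Frequency of an engine order at a given crankshaft speed."""
    return order * rpm / 60.0

for cylinders in (8, 4):  # all cylinders firing vs. cylinder deactivation
    order = firing_order(cylinders)
    print(f"{cylinders} cylinders: order {order:.0f}, "
          f"{order_frequency_hz(1500, order):.0f} Hz at 1500 rpm")
# 8 cylinders: order 4, 100 Hz at 1500 rpm
# 4 cylinders: order 2, 50 Hz at 1500 rpm
```

Halving the firing frequency moves the excitation lower, where frame bending and torsion modes are more likely to respond.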

The frame’s bending and torsion modes were controlled with the CFGs, which were more efficient and easier to mount in any configuration. The CFGs were also lower in cost (using less rare-earth material), offered a higher range of forces at lower frequencies, and weighed less (under 0.5 kg). Beyond cancelling vibration, the CFG was also used to add vibration, providing low-frequency enhancement that helps the audio system simulate ICE vibration and sound. They called this “eVibe.” The enhancement made the simulated engine sounds seem realistic rather than like fake imitations.
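The force from a rotating imbalance is m·e·ω². A common way to make such a force controllable is to pair two co-rotating imbalanced rotors and set their relative phase. The sketch below assumes that arrangement for illustration only; the article does not describe the internals of the Parker Lord CFG, and the m·e value is hypothetical.

```python
import math

def imbalance_force(m_e_kg_m, freq_hz):
    """Force amplitude (N) from one rotating imbalance: F = m*e*omega**2."""
    omega = 2 * math.pi * freq_hz
    return m_e_kg_m * omega ** 2

def two_rotor_force(m_e_kg_m, freq_hz, phase_rad):
    """Resultant of two equal co-rotating imbalances separated by phase_rad."""
    return 2 * imbalance_force(m_e_kg_m, freq_hz) * abs(math.cos(phase_rad / 2))

# Hypothetical rotor imbalance of 1e-3 kg*m at 50 Hz
full = two_rotor_force(1e-3, 50.0, 0.0)       # rotors in phase: maximum force
off = two_rotor_force(1e-3, 50.0, math.pi)    # rotors opposed: forces cancel
```

Varying the relative phase sweeps the circular force smoothly between these extremes, which is what lets a single device both cancel and add vibration.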

And finally, the conference also addressed alternative mobility, specifically urban air mobility, including eVTOLs (electric vertical take-off and landing aircraft), where sometimes quiet is too quiet. During the Fireside Chat session, Gregory Goetchius (Joby Aviation) talked about the unique sound design challenge of “rotorcraft.” Cities such as San Francisco, CA, don’t allow helicopters because of noise, but eVTOLs fall below the noise requirements; they are considered too quiet, and AAS will be required for safety.

Our focus on the future needs to be grounded in the past, with a clear view toward understanding the most useful data for evaluating, designing, and developing automotive acoustics. A key takeaway from this SAE NVH conference is a better understanding of how to use ML and AI. Once the hype has peaked, we will see the true power of these emerging tools to build cars and trucks that sound and feel the way we want them to, more efficiently and quickly. aX

This article was originally published in The Audio Voice newsletter, (#515), May 22, 2025.
