CES 2025: An Automotive Audio Perspective

Roger Shively shares his automotive audio perspective on CES 2025: an evolutionary year, more tempered after the software-defined vehicle explosively became reality last year. Narrow and Deep AI, Machine Learning, and Generative AI played roles in developing and maintaining most of the software and platforms for automotive audio at CES 2025. Shively was going to call his chronicle from the automotive-filled West Hall and the Beach Lot across the street “The Ploughman’s Lunch of Automotive Audio.”

CES 2025 was an evolutionary year, more tempered after the explosive arrival of the software-defined vehicle last year. Maybe that tempered feel made it seem easier to get a taxi or an Uber this year; or maybe getting a ride was about the same as last year, except that traffic flow was no longer rerouted around construction on the Las Vegas Convention Center’s Central Hall. Attendance topped 140,000, about the same as the figure originally reported last year. In the automotive-filled West Hall and the Beach Lot across the street, just as there was uncertainty in vehicle powertrain development, there was a good deal of scenario planning for automotive audio. There might not have been a “wow,” but there was a new-year vibe.

For the automotive industry, 2024 was a good year. Some 16 million vehicles were sold in North America, up 1.9% from 2023. That number is close to pre-COVID levels, but not quite at the 2016 peak of 17.5 million, according to Crain Communications and Statista. EV sales are growing faster than the overall market: up 18% in 2024, they now make up 6.6% of the market. Consumers are responding to more options beyond Tesla and Chevy, as Hyundai/Kia and Ford have more to offer.

The hurdles for EVs are still price and charging. Tesla is leading with price cuts, and carmakers still find it hard to make a business of EVs (a $6k loss on every $50k EV). Even so, electrification is the largest part of future vehicle development, and the charging network is growing considerably.

In addition to audio-based companies such as Sensory, many of the innovators at the Michigan-based Connected Vehicle Systems Alliance (COVESA) Showcase, which filled a 22,000 sq-ft ballroom at the Bellagio on the first night, were focused on artificial intelligence, mobility, electrification, and improving the charging network. The clear takeaway for OEM carmakers in 2025 is that they see an EV-based future. In the near term, electrification, including hybrids, is the path forward.

Narrow and Deep AI, Machine Learning, and Generative AI had their roles in developing and maintaining most of the software and platforms for automotive audio at CES 2025.

For a few years, the Honda/Sony AFEELA vehicle project has been the all-in-one concept totem for the software-defined hardware and AI-driven interaction planned for the automotive user experience. It played that role last year too, but it kept its distance then: we couldn’t get within 3 feet of the exterior. This year we could get inside and experience it, and AFEELA is now taking orders for the first builds.

In case we needed to be reminded, AFEELA “seeks to capture a ‘FEEL’ for each of the unique sensibilities of customers in an instant, and then respond in a positive way.” This is the contemporary approach for everyone else working in automotive audio today: active noise cancellation (ANC), frameworks, platforms, connectivity, immersive and tactile audio, and mood and driver-assist companions. These are all the things Harman, QNX, DSP Concepts, and Aptiv want to provide, along with those prepared to engage, such as Bose and Arkamys.

Each of those providers has, or is preparing, next-generation computing performance to optimize mobility experiences. Powerful computing is essential for this future-ready mobility, including advanced driver assistance and AI companions, and it comes through collaborations with semiconductor providers such as Qualcomm Technologies, with the Snapdragon system on chip (SoC) as the brain.

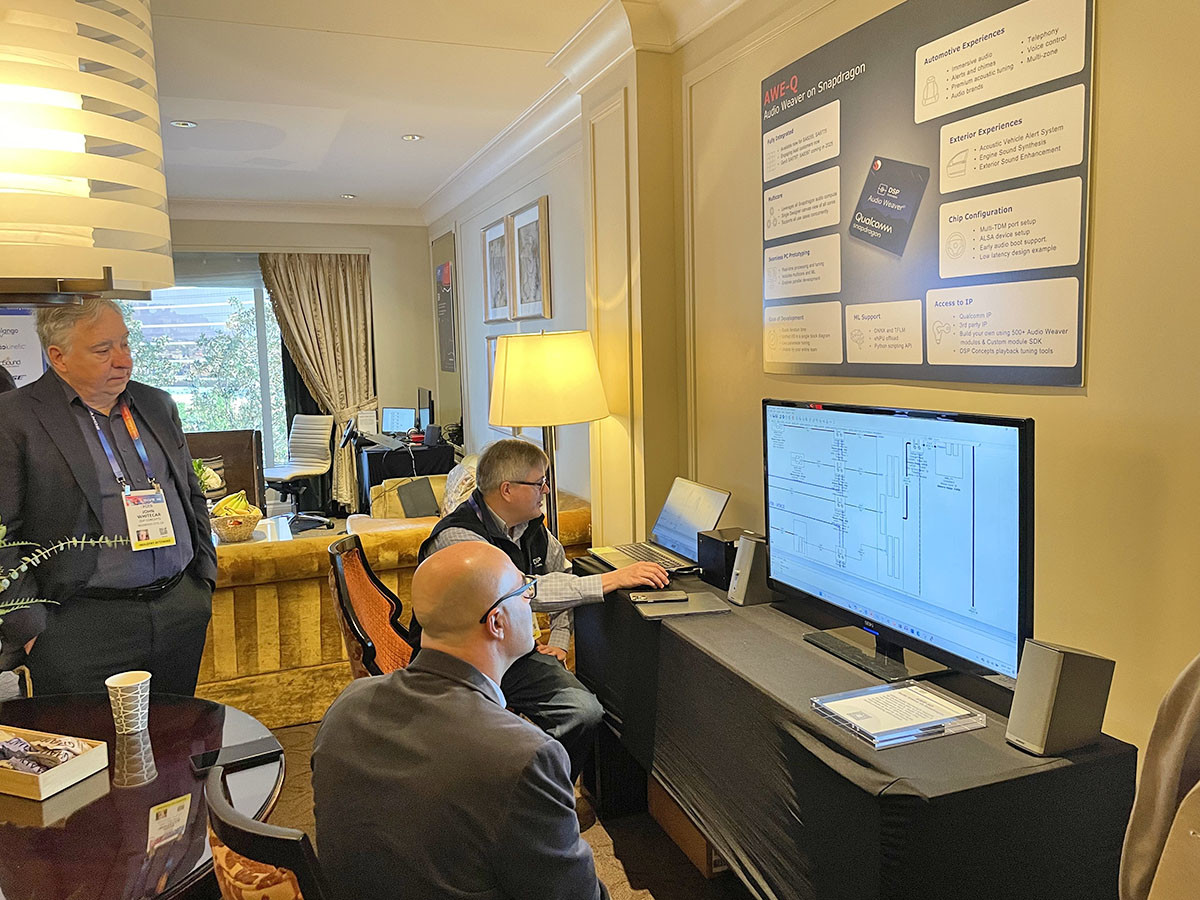

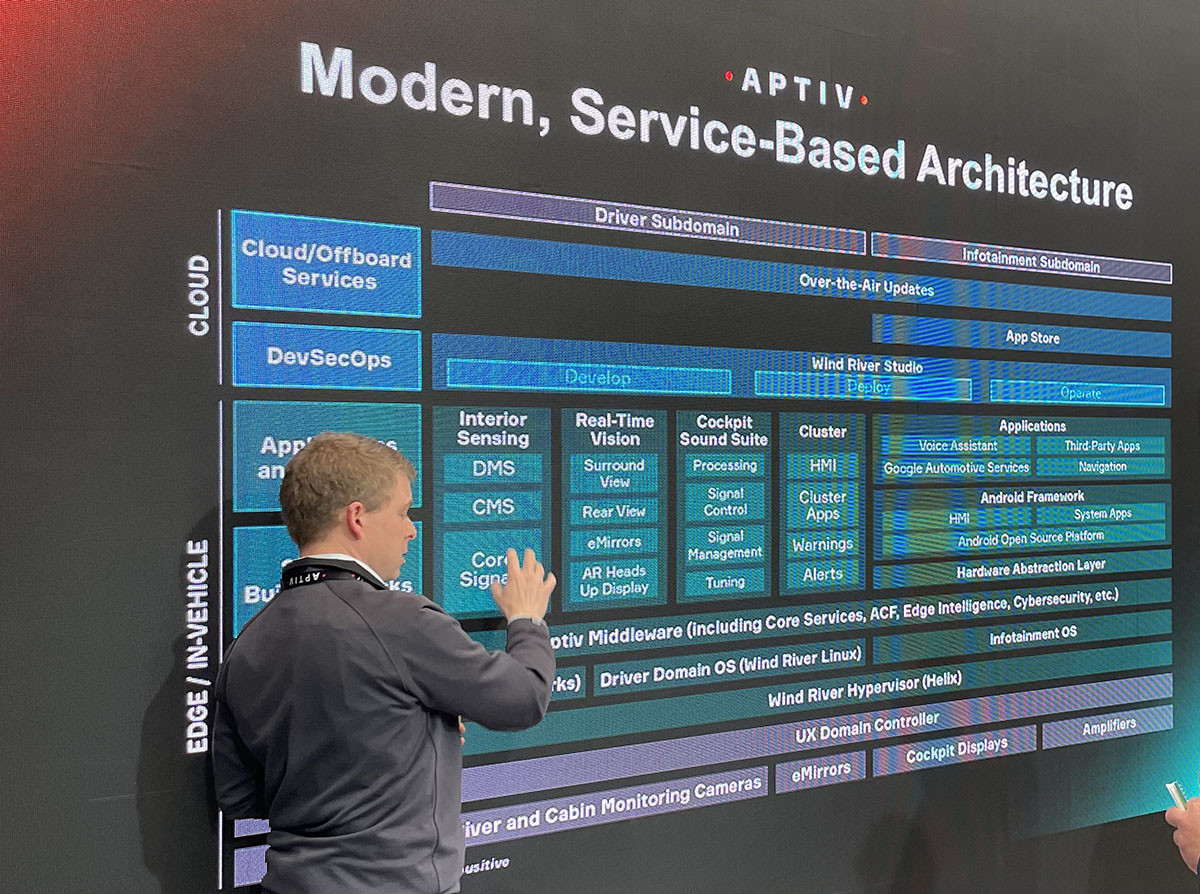

Among the platforms, DSP Concepts rolled out its Audio Weaver on Snapdragon (AWE-Q), demonstrating high processing speed and a record-low latency of 1.6 ms. Frameworks such as QNX are also supporting Qualcomm and MediaTek, and Aptiv is now supporting the software-defined vehicle with its Service-based Architecture.

This has resulted in some of the world’s most advanced intelligent mobility systems: innovative electrical/electronic (E/E) architectures that connect the vehicle’s ECUs and sensors through advanced networks, maximizing overall vehicle performance while emphasizing in-vehicle infotainment (IVI) running on multiple SoCs.

The systems prioritize data across domains, and the new architectures are designed to centralize multiple functions instead of dedicating a separate ECU to each function. With this setup, external data captured by autonomous-driving or driver-alert sensors can be transmitted immediately to the IVI system, both for rendering as 3D graphics and for adjusting the driver’s experience to suit comfort and preference. Most of this is accomplished through partnerships among feature providers and developers, managed by the OEM integrator or framework provider working with them.
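The centralized pattern described above, where one compute platform routes sensor data to every domain that needs it instead of wiring separate ECUs point to point, can be illustrated with a toy publish/subscribe bus. This is a minimal sketch under stated assumptions, not any OEM’s or framework provider’s middleware: the `CentralBus` and `SensorEvent` names and the `adas.object` topic are hypothetical, and production vehicles use automotive networks and middleware rather than an in-process Python dictionary.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SensorEvent:
    """Hypothetical message from an ADAS or driver-alert sensor."""
    topic: str                                            # illustrative topic name
    payload: Dict[str, str] = field(default_factory=dict)


Handler = Callable[[SensorEvent], None]


class CentralBus:
    """Toy publish/subscribe hub standing in for a central compute platform:
    a producer publishes once, and every subscribed domain (IVI graphics,
    audio, comfort) receives the same event."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: SensorEvent) -> int:
        """Deliver the event to every handler on its topic; return the count."""
        handlers = self._subscribers.get(event.topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    bus = CentralBus()
    rendered: List[str] = []
    alerts: List[str] = []
    # IVI domain: would render the detected object as 3D graphics (simulated).
    bus.subscribe("adas.object", lambda e: rendered.append(e.payload["kind"]))
    # Audio domain: would play a directional alert on that side (simulated).
    bus.subscribe("adas.object", lambda e: alerts.append(e.payload["side"]))
    count = bus.publish(SensorEvent("adas.object", {"kind": "cyclist", "side": "left"}))
    print(count, rendered, alerts)
```

The point of the design is that adding a new consumer (say, a seat-vibration alert) means one more `subscribe` call, not another dedicated ECU and wiring harness.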

Harman’s brands were literally on the shelf around its demonstrations of artificial intelligence, presented as Automotive Intelligence. Much like other providers, Harman offered a Ploughman’s Lunch of pre-bundled features, prepared not just for the preservation and use of data and intelligence but also for different flavors, some ready for “white label” rebranding.

Harman’s proposition in its demos was that a powerful central compute platform, ready for Level 2+ autonomous driving as well as cockpit and driver-alert experiences all in one box, and led by proactive AI companions, is the key to delivering comfort and infotainment quality for the vehicle’s occupants.

Harman divided its demonstrations into three zones: Collective Intelligence, where all the products Harman provides come together in a car to create an empathetic experience that detects mood and health; Perceptual Intelligence, which concentrates on emotion through sound, vision, and tactile feedback; and User-Centric Intelligence, which is about making the car a friendly place where you feel safe and welcome, and one that can predict the user’s needs.

The demos culminated in a musical experience in a Harman Kardon-branded vehicle, which used a seat-based speaker architecture with loudspeakers mounted below, within, and above each seat in the car. It also featured mood lighting and a seat-based immersive audio mixer that let each seat independently adjust its level of audio immersion and bass impact.
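A per-seat mixer of the kind described can be reduced to a few gains per seat. The sketch below is illustrative only, not Harman’s implementation: it assumes each seat receives a “stage” stem, an “immersive” stem, and a “bass” stem, maps a hypothetical immersion setting from 0 to 1 onto a linear crossfade between the first two, and applies a bass trim in decibels. The stem names and the `SeatSettings` fields are assumptions.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class SeatSettings:
    immersion: float = 0.5   # hypothetical scale: 0.0 = stage only, 1.0 = fully immersive
    bass_db: float = 0.0     # hypothetical bass-impact trim in dB


def db_to_gain(db: float) -> float:
    """Convert a level in decibels to a linear amplitude gain."""
    return 10.0 ** (db / 20.0)


def seat_gains(settings: SeatSettings) -> Dict[str, float]:
    """Per-stem gains for one seat: linear crossfade plus bass trim."""
    imm = min(max(settings.immersion, 0.0), 1.0)  # clamp out-of-range input
    return {
        "stage": 1.0 - imm,
        "immersive": imm,
        "bass": db_to_gain(settings.bass_db),
    }


def mix_seat(stems: Dict[str, float], settings: SeatSettings) -> float:
    """Mix one sample of each stem into the signal for a single seat."""
    gains = seat_gains(settings)
    return sum(gains[name] * stems[name] for name in gains)


if __name__ == "__main__":
    cabin = {
        "driver": SeatSettings(immersion=0.2, bass_db=0.0),
        "rear_left": SeatSettings(immersion=1.0, bass_db=6.0),
    }
    stems = {"stage": 0.5, "immersive": 0.25, "bass": 0.1}
    for seat, settings in cabin.items():
        print(seat, round(mix_seat(stems, settings), 3))
```

A linear crossfade keeps the two stem gains summing to 1; an equal-power (sine/cosine) crossfade is a common alternative when the stems are uncorrelated.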

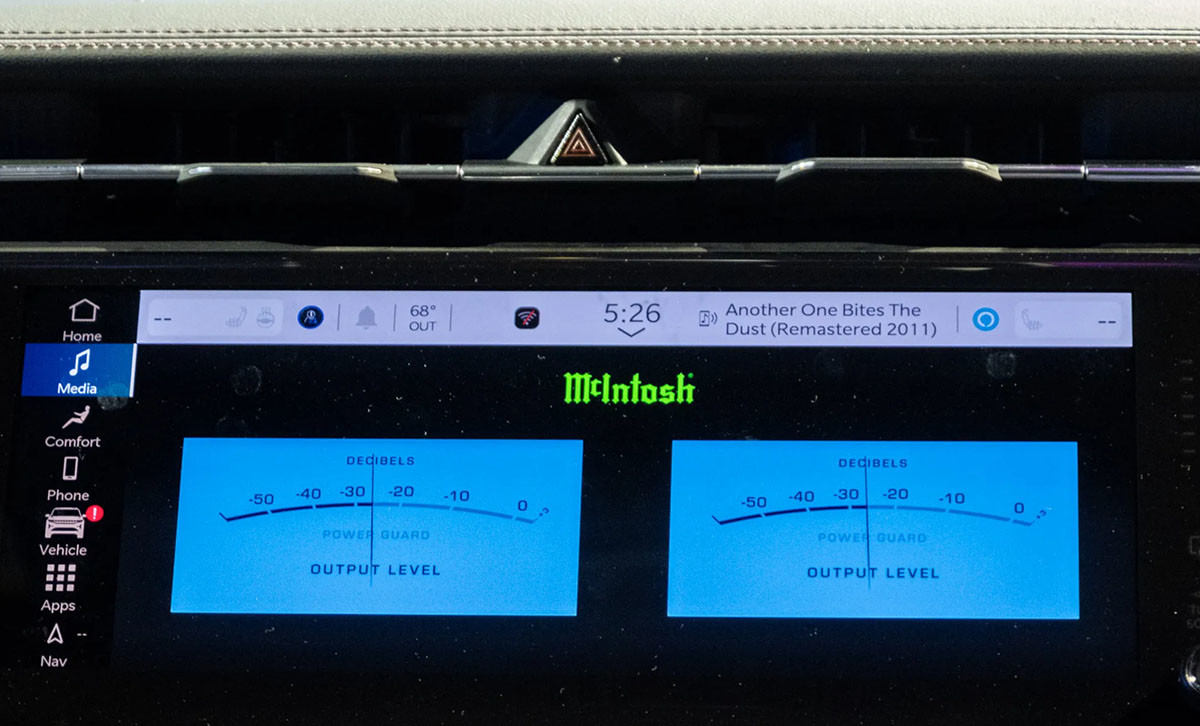

In the past, Bose has been as much a tech company as an audio company. This year, Bose mostly demonstrated audio. A Cadillac Escalade was available, as was Bose’s next-generation Atmos-enabled speaker system in a GMC Denali, which will ship this year. Its biggest news was the acquisition of the McIntosh and Sonus faber brands, and a McIntosh-branded Jeep Wagoneer S was on hand.

Arkamys, another provider with 100 million cars on the road, demonstrated Road Noise Management, which uses a given car’s microphones and sensors to provide simple narrow-band cancellation, as well as Media Enhancement. New for Arkamys this year was being embedded on NXP Quantum and Cadence’s HiFi 5 via DSP Concepts’ Audio Weaver. Its roadmap is to be prepared for the software-defined vehicle and ready to engage customers at any level.
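Narrow-band cancellation targets tonal noise. A textbook way to remove a single tone is Widrow’s adaptive noise canceller with a sine/cosine reference, which behaves as an adaptive notch filter. The sketch below shows that generic technique, not Arkamys’s algorithm, and assumes the tone frequency is already known (for example, estimated from vehicle sensors). It also simplifies heavily: it cancels the tone electrically in a sampled signal, whereas in-cabin cancellation has to act acoustically through loudspeakers and account for the speaker-to-microphone path (typically with filtered-x LMS). The function name `lms_notch` and the step size are illustrative choices.

```python
import math
from typing import List, Sequence


def lms_notch(d: Sequence[float], freq_hz: float, fs_hz: float,
              mu: float = 0.01) -> List[float]:
    """Remove one tone at freq_hz from signal d with a two-weight LMS canceller.

    The reference is a unit-amplitude cosine/sine pair at freq_hz; the weights
    learn the tone's amplitude and phase, and the returned error signal is d
    with that tone subtracted once the weights have converged.
    """
    omega = 2.0 * math.pi * freq_hz / fs_hz
    w_c = w_s = 0.0
    residual: List[float] = []
    for n, sample in enumerate(d):
        x_c, x_s = math.cos(omega * n), math.sin(omega * n)
        estimate = w_c * x_c + w_s * x_s   # current estimate of the tonal noise
        e = sample - estimate              # what remains after cancellation
        w_c += 2.0 * mu * e * x_c          # LMS weight updates
        w_s += 2.0 * mu * e * x_s
        residual.append(e)
    return residual


if __name__ == "__main__":
    fs = 8000.0
    # Simulated signal: a 100 Hz "road noise" tone plus a 1 kHz component to keep.
    d = [0.5 * math.sin(2 * math.pi * 100 * n / fs + 0.3)
         + 0.2 * math.sin(2 * math.pi * 1000 * n / fs) for n in range(8000)]
    e = lms_notch(d, freq_hz=100.0, fs_hz=fs)
    tail = e[-2000:]
    rms = math.sqrt(sum(x * x for x in tail) / len(tail))
    print(f"residual RMS over last 2000 samples: {rms:.3f}")
```

The step size `mu` trades convergence speed against notch width: a larger value tracks a changing tone faster but also removes more of the neighboring spectrum.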

Arkamys also demonstrated Digital Premium On Demand, showing that it has its own infrastructure ready to go (AWS-hosted cloud services, powered by Salesforce) for hardware-free upgrades and features on demand. It demonstrated Premium Audio Sound Signatures as add-ons to existing audio systems, partnering with Boston Acoustics as an example of white-label branding of premium sound.

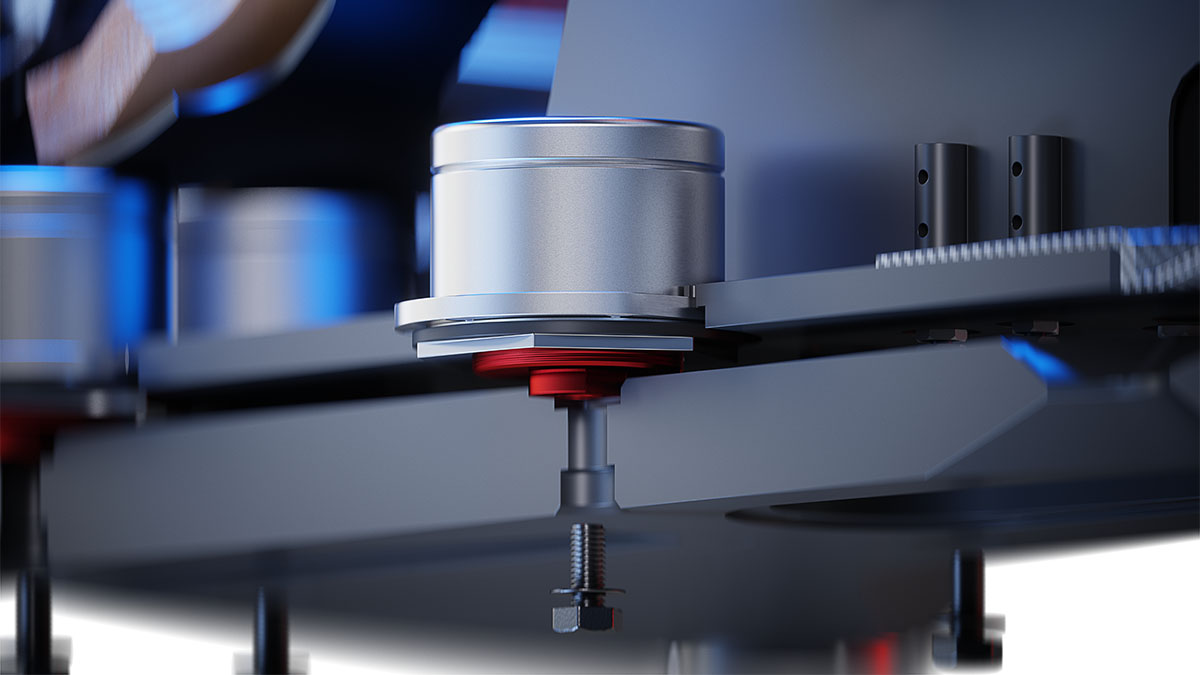

Sonified, part of the Trèves Group and a member of the Audio Foundry technology collaborative, partnered with Denon, Dirac, and Tymphany to implement and demonstrate an improved application of headrests with its Linear Transducer Technology. The transducers are exciters integrated into the headrest materials and into an internal volume of the headrest, bringing together the materials-science and transducer expertise of Trèves and Sonified. Headrest and headliner demonstrations were given, along with a full-system demo using Tymphany woofers, all tuned by Dirac.

This year’s demonstration was much improved over the concept presented last year. Bringing the technology specialists together highlighted each partner’s strengths and showed how their disruptive ideas could combine into a practical automotive application.

At the Xperi pavilion, Mercedes-Benz teamed up with Sony Pictures Entertainment’s RIDEVU In-Car Entertainment Service in a Mercedes-Benz E-Class to feature fully immersive IMAX Enhanced films. The service is fully integrated with select OEMs, with Mercedes-Benz the first global automotive OEM. It introduces an immersive in-car entertainment experience, bringing the largest catalogue of IMAX Enhanced movies from Sony Pictures. IMAX Enhanced is the only way to experience IMAX’s signature picture and sound outside of a theater. RIDEVU delivers select IMAX Enhanced films featuring IMAX’s expanded aspect ratio (EAR), IMAX’s proprietary DMR process, and DTS:X sound.

AI voice assistants were also a topic of discussion. They have pushed back against automakers’ attempts to take control of the central infotainment system back from smartphone-mirroring services such as CarPlay, as Apple and Google cement their dominance in a new way: artificial intelligence-enabled voice assistants. “Even within the vehicle, you’re competing with what’s on your mirror link or screen projection-type protocols, what’s in your phone,” said Aakash Arora, the North America leader of Boston Consulting Group’s automotive and mobility sector. The automakers are not only “competing with other OEMs, they may be competing with their own apps.” Apple and Google use large language models to parse speech and to respond in a polite, relaxed manner.

More established companies in the space, such as SoundHound AI, are also leveraging OpenAI’s ChatGPT and other Silicon Valley large language models. Some integrated voice assistants, such as SoundHound’s, can also decide whether processing should happen on board the vehicle or in the cloud, which keeps these products functional without a mobile connection.
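SoundHound’s actual arbitration logic is not described in the article, so the following is only a hypothetical policy showing the kind of on-board-versus-cloud decision mentioned above. The `Route` enum, the `LOCAL_INTENTS` set, and the 300 ms latency budget are all assumptions for illustration.

```python
from enum import Enum
from typing import FrozenSet


class Route(Enum):
    ON_BOARD = "on_board"
    CLOUD = "cloud"


# Hypothetical intents the embedded engine is assumed to handle locally.
LOCAL_INTENTS: FrozenSet[str] = frozenset({"climate", "media", "phone_call", "window"})


def route_request(intent: str, connected: bool, cloud_rtt_ms: float,
                  latency_budget_ms: float = 300.0) -> Route:
    """Decide where to process a recognized request (illustrative policy).

    Vehicle-control intents, and anything without connectivity, stay on board;
    open-ended queries go to the cloud only if the round trip fits the budget.
    """
    if not connected:
        return Route.ON_BOARD      # no network: fall back to the embedded engine
    if intent in LOCAL_INTENTS:
        return Route.ON_BOARD      # handled locally; needs no network round trip
    if cloud_rtt_ms > latency_budget_ms:
        return Route.ON_BOARD      # cloud too slow right now; answer locally
    return Route.CLOUD             # open-ended query with a good connection


if __name__ == "__main__":
    print(route_request("climate", connected=True, cloud_rtt_ms=80.0))
    print(route_request("restaurant_search", connected=True, cloud_rtt_ms=80.0))
    print(route_request("restaurant_search", connected=False, cloud_rtt_ms=0.0))
```

In this sketch an open-ended request made offline still gets an on-board answer, presumably a more limited one, rather than failing outright.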

“The ability to personalize and access your own personal information and lifestyle and services,” will be a lot more powerful than it is today, said Alex Oyler, director at global automotive research firm SBD Automotive. Automakers “can’t build a competitive experience because they don’t have the data.” While automakers strive to build visual interfaces that can compete with big tech, voice assistants represent the next battleground for a key consumer touch point.

Manufacturers already outsource the building blocks of voice assistants (automatic speech recognition and natural language processing) to third parties such as SoundHound AI, Cerence, and Amazon. Google also has a built-in native voice assistant for automotive use. Building a voice assistant from scratch would involve advanced speech-to-text conversion and processing, which most automakers are not interested in taking on.

Sensory is also working in this area. Its innovations in speech recognition, emergency-vehicle detection, voice assistants, biometrics, and natural language understanding are used in industries such as automotive. With them, carmakers can create their own in-vehicle voice assistants, or “Home Hubs,” free from the constraints of, and potential changes by, major cloud providers such as Amazon and Google.

As tempered as it might have felt, there was a new-year bounce and a sense that, as frameworks, developers, and OEMs converged, the direction was becoming clearer and scenarios were coming into focus. In the end, we just might want our AI companions (sometimes), and that moment of mood-altering Zen that only stacks of algorithms on multiple SoCs can provide to mere mortals.

This article was originally published in The Audio Voice newsletter, (#500), January 23, 2025.

