Audio Engineering Society European Convention in Warsaw, Poland

Immediately following the intense schedule of the High End show in Munich, Germany, audioXpress attended the 2025 Audio Engineering Society European Convention (May 22-24) in Warsaw, Poland. It was an exciting event where most of the deeper technical sessions focused on spatial and immersive audio. But what does "immersive" even mean? And what is the future of spatial audio for consumers? Those questions were asked and debated at this AES convention, where leading audio engineers and researchers presented the latest advancements in these applications.

For some reason, I had this happy memory in mind as I headed to Warsaw, Poland, for the 2025 AES European Convention (May 22-24), immediately following the intense schedule of the High End show in Munich, Germany. I think it is important to compare these events as a reminder that a direct connection between education and development, and between academia and industry, is critical to ensuring the relevance and progress of audio. Without that connection, many perfectly valid research efforts tend to go in circles and never find actual adoption.

This, for me, was clearly evident when I recently reported from the Global Audio Summit 2025, promoted in March of this year by the China Audio Industry Association (CAIA) in Shanghai. It was a conference where academic, scientific, engineering, and industry efforts converged perfectly, also under the auspices of an audio industry association. The big difference there was that every presentation placed all those research efforts in a product development perspective. That included multiple sessions illustrating failed or less successful efforts, where I witnessed the presenters recommend that attendees save themselves the time of pursuing that approach and focus instead on new, uncharted directions. This, for me, was a very refreshing and unique perspective.

Seeing competing companies discuss actual products they had developed, acknowledge research conducted at universities and other entities, and explain with slides what failed to gain acceptance by real consumers in actual products changed my whole perception of the content I normally see presented at international audio conferences. In a different way, the sessions and demonstrations I witnessed at the AES Automotive Conference in Gothenburg provided a similar sense of connection with real applications, which made the whole experience extremely valuable for those who attended.

Modern Warsaw

I probably should have made a fairer comparison between this 158th AES Convention in Warsaw and the previous AES Europe convention in Madrid, Spain, in 2024. That event was hosted by the Universidad Politécnica de Madrid, a vibrant academic institution and a natural environment for an AES international convention, providing a valuable opportunity for researchers and students to interact with the global audio engineering community.

In Madrid (June 15-17, 2024), the focus was, as usual, on the more creative and artistic aspects of music production and recording, with the technical sessions covering immersive audio production and reproduction, room acoustics, and audio signal processing. Among the most recognized papers that year were studies on multichannel loudspeaker reproduction, spatial sound, and acoustic simulation and auralization, and once again some oriented toward automotive applications.

As I headed for the ATM Studio, a media production hub in an industrial area of Warsaw, I also had in mind many of the discussions and trends from the Munich show days before, marked by progress in personal audio (headphones), high-quality reproduction, digital audio, audio electronics, and naturally loudspeaker technology (see my report here).

I know that the Audio Engineering Society has also scheduled the 2025 AES International Conference on Headphone Technology, taking place August 27-29 in Finland. As happened the year before with Automotive Audio, these themed AES conferences tend to draw the most relevant (and industry-oriented) content away from the general AES Conventions, so I was not expecting to see many sessions addressing hearables – which is probably the most relevant and exciting topic in audio these days (after automotive audio).

Anyway, after studying the recently published program and full schedule of nearly 100 sessions for the 2025 AES European Convention, I found more than enough content to motivate me to attend (it also helped that Munich to Warsaw is such a short flight). And indeed, from the moment we arrived at the ATM Studio, the environment couldn't have been more welcoming, and I was looking forward to mingling with the nearly 400 other attendees. The energy from an audience with a strong local component of industry experts and students was noticeable. Many of the deeper technical sessions by leading audio engineers and researchers focused on the latest advancements in spatial and immersive audio applications. In fact, 34 of the sessions focused directly on spatial audio.

One major complaint was that simple black curtains were used to separate four of the auditoriums (set up within one of the ATM Studio sound stages), making some presentations hard to follow when sound was playing loudly in adjacent sessions.

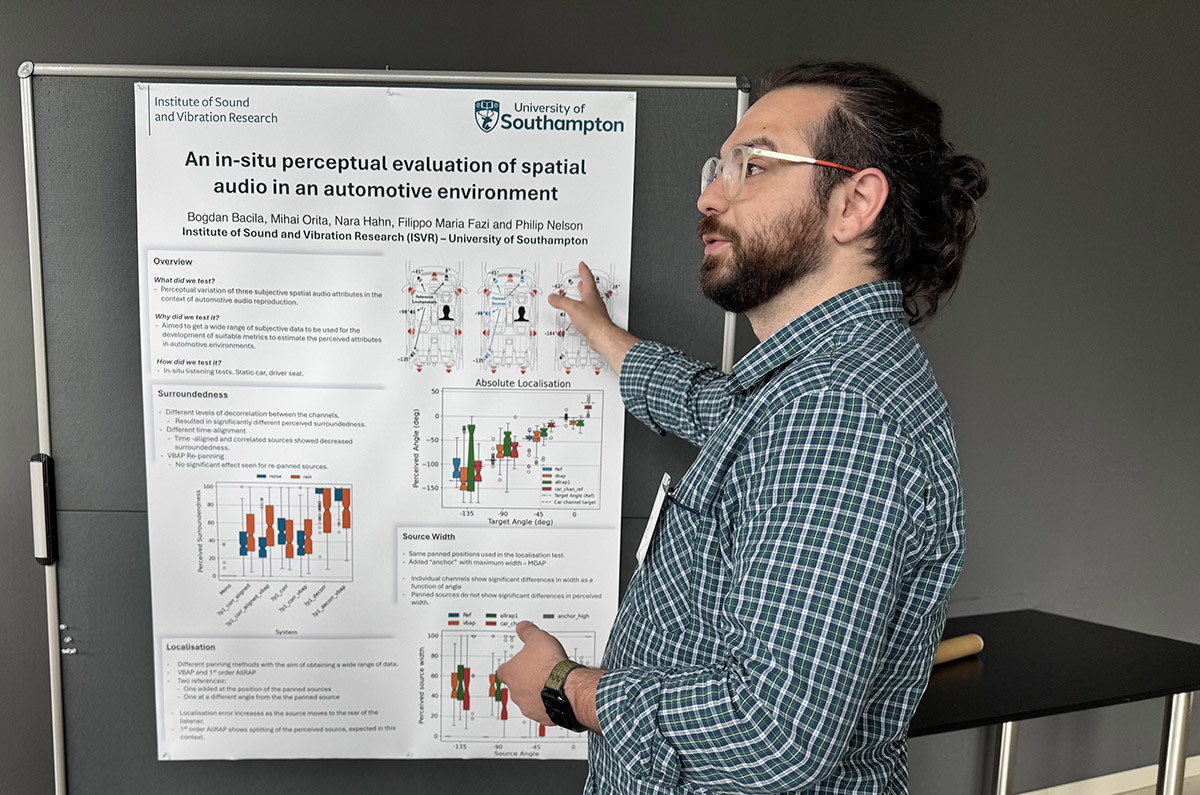

This AES European Convention benefited greatly from the direct involvement of the local AES Polish section, with the support of a very vibrant community of Polish audio companies, including some important manufacturing operations, and above all a very strong academic community represented by institutions such as the Gdańsk University of Technology, AGH University of Science and Technology, Fryderyk Chopin University of Music, Wrocław University of Technology, Warsaw University of Technology, and others. One common thread among the papers and posters presented by students and researchers (regardless of the application field) was spatial and binaural audio.

Immersiveness

When visiting an audio show such as AXPONA in the US, it's always a special thing to find a room showcasing a multichannel system or home theater installation. It is a show 99% focused on stereo. At the High End in Munich, where the convergence with a broader set of home audio and lifestyle-oriented brands is more prominent, we can find some really outstanding multichannel rooms, but again, we can say that the show is 90% stereo. This year, a welcome exception was the Kii Audio room, which had an impressive Dolby Atmos setup and hosted presentations by music producers, mastering engineers, and artists (Anette Askvik) detailing their vision for immersive audio.

Is there a disconnect? Not really. We are going through a transition, the results of which are not yet clear. As Prof. Karlheinz Brandenburg (now founder and CEO of Brandenburg Labs GmbH) told the audience at one of the sessions of this AES Warsaw convention, "consumers have not yet experienced spatial audio." His new company is determined to make that possible, at least over headphones, but says we are at least two years away from that. The key, the company explains, lies in combining already-studied elements, such as low-latency head-tracking, with Binaural Room Impulse Responses (BRIRs), which capture the cues our brain uses to determine not just where a sound comes from, but also what kind of space we're in.

I may be privileged to have already experienced a demo of the Brandenburg Labs Okeanos Pro system, which is now available for scientific research institutions and studios that want to achieve "life-like simulations of real speaker systems, allowing audio engineers to precisely control the spatial image of their mixes on headphones for the first time." I know this works. Convincingly. But I still prefer to have the same level of experience in a room surrounded by speakers, or even in front of a speaker array with a convincing 3D spatialization implementation such as the solutions offered by Audioscenic.

Contrary to what Karlheinz Brandenburg stated in Warsaw, millions of consumers have experienced real Spatial Audio, but in the form of Dolby Atmos movie soundtracks heard in movie theaters. That is something very few people even associate with listening to music. What he meant is that a truly convincing binaural representation of Spatial Audio isn't yet available as such, at least for consumers.

Nor is it clear what the intention or purpose is of the whole discussion around Spatial Audio, immersive sound, 3D sound, and more, taking place at forums such as this AES Convention. There is no consensus about what "Spatial Audio" is for music, as Apple knows well, despite all its efforts to promote Dolby Atmos Music on streaming services. The experience remains unconvincing for consumers over AirPods and other headphones, even with head-tracking. In contrast, it is very convincing when experienced inside a new electric vehicle fitted with a Dolby Atmos speaker system, as the automotive industry is now promoting.

All this was under discussion during the 2025 AES European Convention, where intense research from multiple angles is trying to meet the requirements for immersive audio experiences in entertainment, gaming, virtual reality (VR), and augmented reality (AR) applications. There is a general view in some circles that "immersive sound" and realistic 3D soundscapes will be preferred to stereo by consumers on their listening devices. This view has merit, but it satisfies neither the production format criteria nor the consistency of experience that most efforts focused on music and creative content are pursuing. Discussions around binaural recording and spatial sound processing are sometimes moving in conflicting directions in terms of actual industry adoption of immersive audio for applications such as sound reinforcement and live sound events.

One thing is certain: when so many bright minds, such as the audio researchers who presented at this AES convention, converge in the same direction, something is bound to happen. I saw multiple references to Apple's Vision Pro as a completely new platform that goes beyond everything previously tried in VR. There is also a sense of the huge potential in binaural rendering of audio scenes captured with microphone arrays, creating realistic sound experiences to which consumers will respond enthusiastically. As Brandenburg Labs states, the only problem is that the (reproduction) devices to do that are not yet available to consumers.

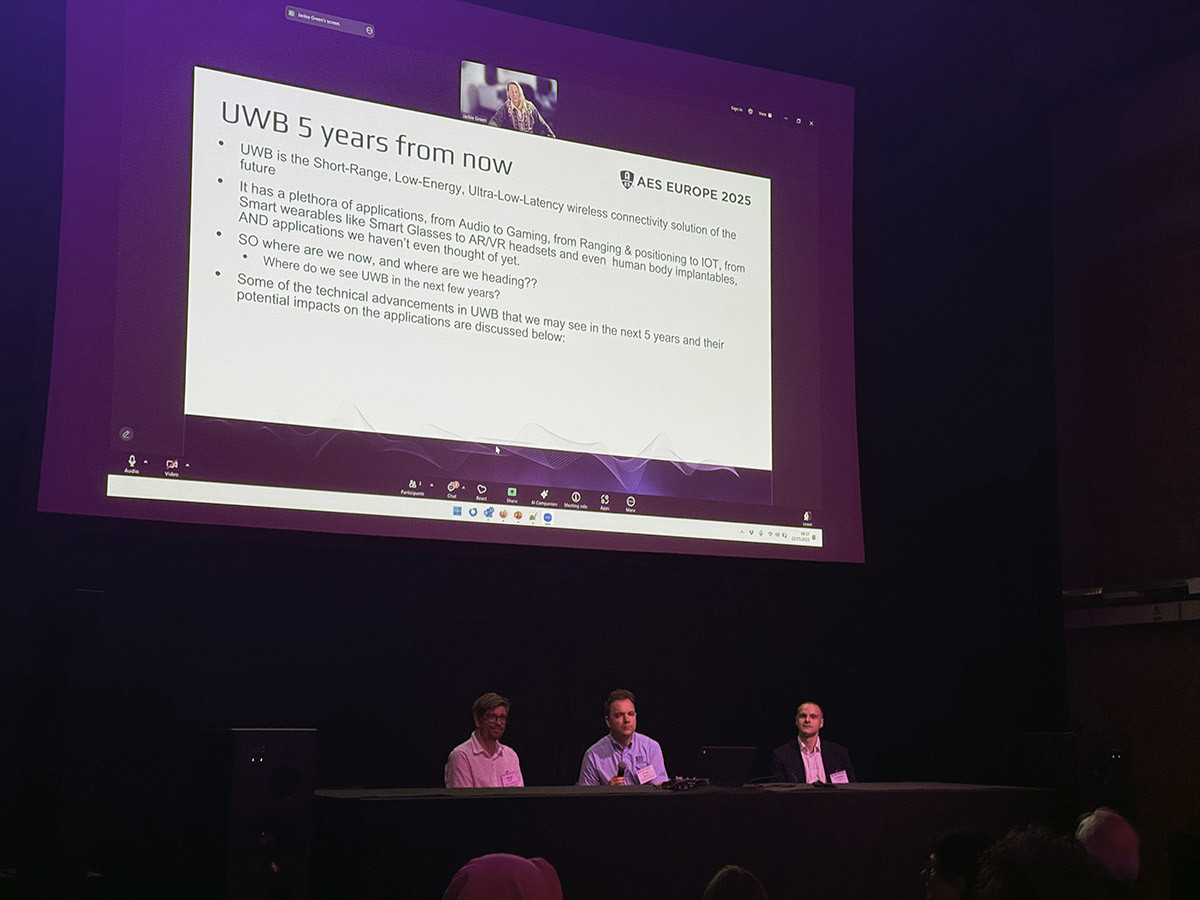

At a conference where Dolby was mentioned in most of the sessions, there was no one there to speak for the company, which also explains why the question "What Is the Future of Spatial Audio for Consumers?" was debated in a panel from five completely different perspectives. The panel, originally announced as a workshop, was one of the most interesting moments of this AES Europe convention and included Jake Hollebon (Audioscenic), Jan Skoglund (Google), Markus Zaunschirm (atmoky), Jean-Marc Jot (Virtuel Works), and Hyunkook Lee (Applied Psychoacoustics Lab, University of Huddersfield).

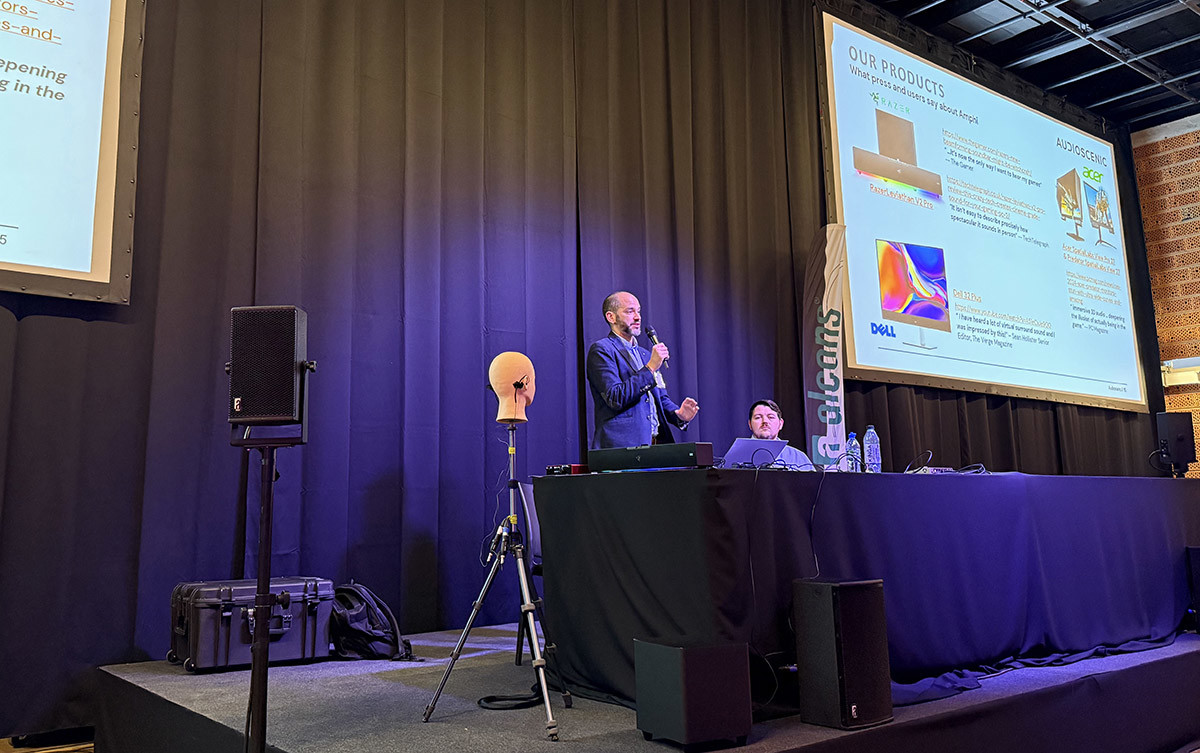

The standing-room-only session, moderated by Marcos Simón, Audioscenic CEO, reflected on the strong momentum for Spatial Audio technology and the assumption that "…we are at a tipping point where Spatial Audio availability is going to become mainstream." Jacob Hollebon, Principal Research Engineer at Audioscenic, explained that the key for this to happen is making 3D spatial audio reproduction work in everyday products, from soundbars to TVs and laptops, something Audioscenic is already turning into a commercial reality by using beamforming on loudspeaker arrays in combination with listener-adaptive position tracking.

This "reality" contrasted with what Jan Skoglund described, essentially reflecting the perspective of Eclipsa Audio, the "open" immersive audio format that Google wants to use on YouTube, which is based on the new Immersive Audio Model and Formats (IAMF) specification available under a royalty-free license from the Alliance for Open Media. In my opinion, as always, this is something that will be funded and promoted only until Google gets tired of it, resulting in yet another format that essentially aims to translate any immersive content, allowing everyone to continue producing their 7.1.4 mixes to the Dolby Atmos standard.

Of course, the view of spatial audio from Markus Zaunschirm, co-founder of atmoky, is also based on a very specific focus: creating content for virtual/extended reality and gaming, where the limits continue to be the playback devices, but where production creativity has no boundaries. A broader perspective on applications was offered by Jean-Marc Jot, currently at Virtuel Works, who previously worked on Spatial Audio efforts for companies such as iZotope, Magic Leap, DTS, Creative, and IRCAM, and who has left a clear mark on the industry with many of the tools currently in use for production and live sound.

Among those is SPAT (from Spatialisateur in French), the object-based and perceptual immersive mixing solution originally researched at the French institute IRCAM, which motivated Harman Professional to acquire FLUX Software Engineering, the spin-off entity created to commercialize SPAT. From Jean-Marc Jot's perspective, the focus needs to be on embracing format-agnostic solutions for "true-to-life, timbre-preserving personal spatial audio experiences" that can apply to both entertainment and business applications, with any binaural audio rendering engine.

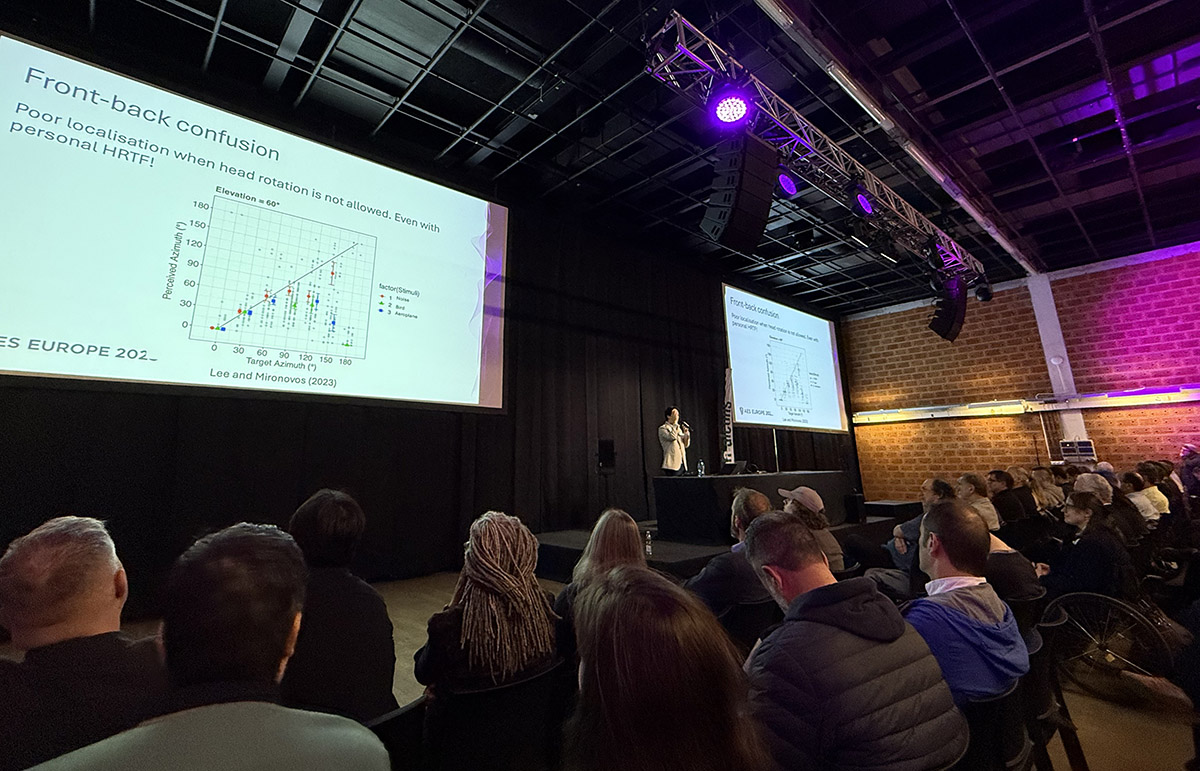

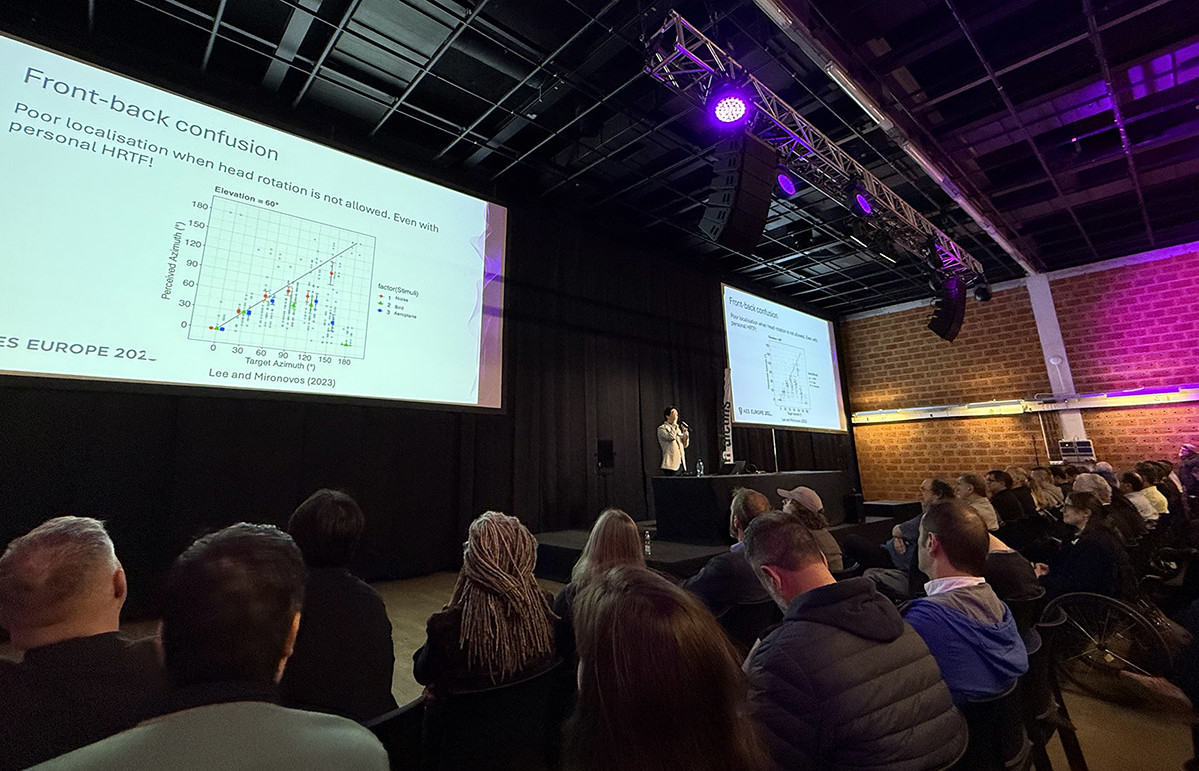

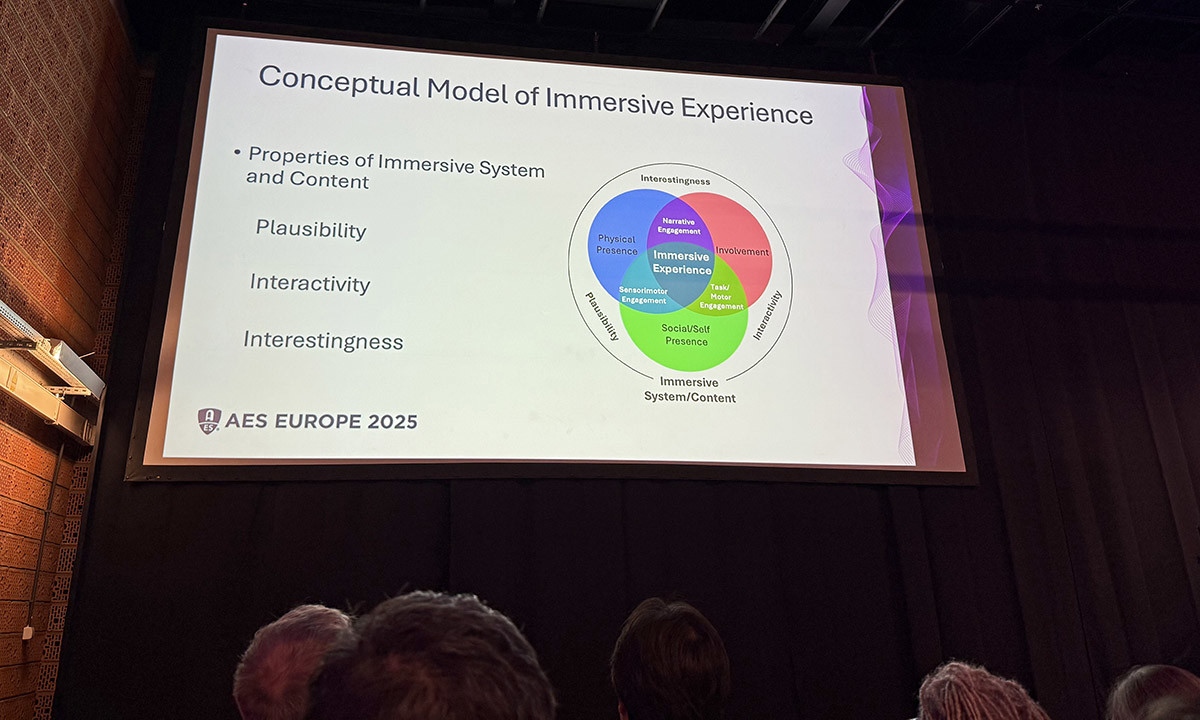

Probably the most colorful and inspiring presentation was from Hyunkook Lee, the notable University of Huddersfield professor and founder of the Applied Psychoacoustics Lab (the creators of the Virtuoso binaural renderer), who provoked the audience by questioning everything and teasing them with the idea that even mono can be immersive, depending on how plausible, interesting, and interactive an experience can be. He was expanding on a conceptual model he had presented the day before in his keynote during the convention's opening ceremony.

In that keynote, titled "Dimensions of Immersive Audio Experiences," Hyunkook Lee characterized the complexity of what is taking place in music, film, gaming, and virtual/augmented reality, where "immersive" is used as an umbrella term for spatial and 3D audio. He says this lack of understanding of what "immersive" truly means leads to consumer confusion and disappointment when content is labelled but not perceived as "immersive." In response, he outlined his field of research and the fundamentals of a conceptual model, though leaving more open questions than consensus.

Overall, the 2025 AES convention in Warsaw was extremely rich in content addressing the technological pieces of the Spatial Audio and immersive puzzle, providing a good indication that something is bound to happen very soon in multiple fields. But we are probably, as Prof. Brandenburg said, about two years away from having something convincing for consumers. This is in line with the timeline for modern vehicles fitted with Dolby Atmos factory sound systems to become mainstream in regions of the world beyond China. I sincerely hope that the upcoming 2025 AES International Conference on Headphone Technology in Finland offers a clearer picture of this becoming a commercial reality in that time frame.

Meanwhile, a reminder that the 2025 AES Show returns to the West Coast and takes place in Long Beach, CA, October 23-25. aX

This article was originally published in The Audio Voice newsletter (#517), June 12, 2025.
